New Research Leads to Improved Visual Processing Using Cameras That Learn

Current autonomous travel technologies rely heavily on a combination of digital cameras and graphics processing units originally designed to render video game graphics. One problem is that these systems typically transmit a great deal of unnecessary visual information between sensors and processors. For example, an autonomous vehicle might process the details of the trees at the side of the road, extra information that consumes power and takes processing time. A team of robotics and artificial intelligence researchers in the UK is developing camera systems that learn what they are viewing. This lets the systems discard unnecessary data in real time and, in doing so, substantially speeds up the processing of visual information.

According to interestingengineering.com, the team is pursuing a different approach to more efficient machine vision. The research is part of a collaboration between the University of Manchester and the University of Bristol in the United Kingdom. Two separate papers from the collaboration have shown how sensing and machine learning can be combined to create new types of cameras for AI systems.

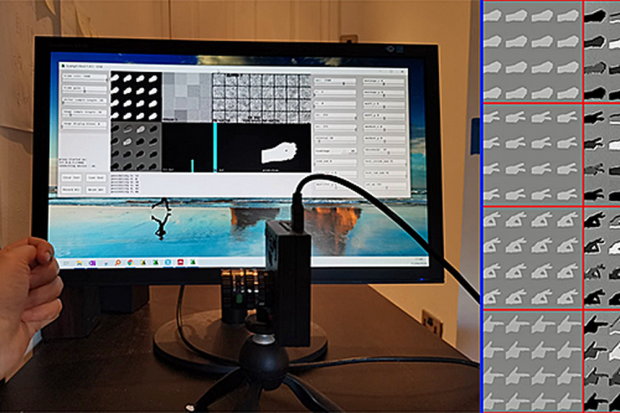

The research points towards a future of intelligent cameras that can filter data before it is sent for processing. “We can borrow inspiration from the way natural systems process the visual world – we do not perceive everything – our eyes and our brains work together to make sense of the world and in some cases, the eyes themselves do processing to help the brain reduce what is not relevant,” Walterio Mayol-Cuevas, Professor in Robotics, Computer Vision and Mobile Systems at the University of Bristol, explained in a press release. The papers detail the implementation of Convolutional Neural Networks (CNNs), a type of AI algorithm that enables visual understanding, directly on the image plane, that is, within the sensor itself. The CNNs can classify frames thousands of times per second without ever having to record those frames or send them down the processing pipeline.
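To make the idea of classifying on the image plane more concrete, here is a toy simulation in plain Python. It assumes an 8-bit grayscale frame, two hand-picked 3×3 edge kernels, and sum pooling; the kernels, labels, and function names (`conv3x3_relu`, `classify_in_sensor`) are hypothetical and are not the networks from the papers or SCAMP's programming model. What it is meant to show is the data flow: each pixel's value is combined with its neighbours in place, and only a class index, not the frame, leaves the simulated sensor.

```python
# Hypothetical sketch: a toy "in-sensor" classifier. Only a class index
# leaves the simulated sensor; the raw frame is never passed on.
from typing import List

Frame = List[List[int]]
Kernel = List[List[int]]

# Two hypothetical 3x3 edge kernels (illustrative, not taken from the papers).
VERTICAL_EDGE: Kernel = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]
HORIZONTAL_EDGE: Kernel = [[-1, -1, -1], [0, 0, 0], [1, 1, 1]]
KERNELS = [VERTICAL_EDGE, HORIZONTAL_EDGE]
LABELS = ["vertical stripes", "horizontal stripes"]


def conv3x3_relu(frame: Frame, kernel: Kernel) -> Frame:
    """Each interior pixel combines its own value with its 8 neighbours,
    standing in for a per-pixel processor that can read adjacent pixels.
    Border pixels are left at 0."""
    h, w = len(frame), len(frame[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            acc = 0
            for dy in range(3):
                for dx in range(3):
                    acc += kernel[dy][dx] * frame[y + dy - 1][x + dx - 1]
            out[y][x] = max(acc, 0)  # ReLU keeps only positive responses
    return out


def classify_in_sensor(frame: Frame) -> int:
    """Sum-pool each feature map and return the index of the strongest one."""
    scores = [sum(map(sum, conv3x3_relu(frame, k))) for k in KERNELS]
    return scores.index(max(scores))


if __name__ == "__main__":
    size = 8
    # Columns in pairs: 255, 255, 0, 0, 255, 255, 0, 0
    frame = [[255 if (x // 2) % 2 == 0 else 0 for x in range(size)]
             for _ in range(size)]
    label = classify_in_sensor(frame)
    print(LABELS[label])  # vertical stripes
    print(f"{size * size} bytes of pixels stay on-chip; 1 byte leaves the sensor")
```

In a pixel-parallel design, the nested loop over `y` and `x` would instead be carried out by all pixel processors at once; the sequential Python loops only stand in for that behaviour.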

The work uses the SCAMP architecture developed by Piotr Dudek, Professor of Circuits and Systems and principal investigator (PI) at the University of Manchester, and his team. SCAMP is a camera-processor chip with a processor embedded in every pixel, and these processors are interconnected. Professor Dudek said: “Integration of sensing, processing, and memory at the pixel level is not only enabling high-performance, low-latency systems, but also promises low-power, highly efficient hardware.”

For more information, see also this iHLS summary and watch these videos. Our thanks to Robin E. Alexander, President ATC, alexander technical[at]gmail[dot]com, for her assistance with this report.